"org.openqa.selenium.WebDriverException: Element is not clickable at point (411, 675). Other element would receive the click: ..."

The error message above is well known to most QA engineers and developers who work with Selenium. Sometimes it makes perfect sense, but it can be quite confusing in other cases, especially when ‘Driver.findElement()’ successfully returns the element you are trying to select. It just so happened that I was struggling to fix this error right before I saw the schedule for the Selenium Conference 2016 in London. I noticed a talk about the flawed vision of Selenium and signed up for it, hoping to find out why the ‘findElement()’ method doesn’t work as expected and how other people deal with the issues caused by this flawed vision.

The title of the talk was: ‘Is It or Is It Not Really Visible Selenium’s Flawed Vision’. As expected, the speaker started off by mentioning the ‘findElement()’ method and added that, to understand why it sometimes produces unexpected results, you first need to know how webpages are rendered in a browser.

Rendering of webpages

This introduction didn’t really provide any new insights. He explained the rendering of a webpage using the URL ‘Google.com’ as an example. You type the URL and press enter, or you click on a link. If the address is valid, the server sends a response to the browser, which starts downloading and parsing the HTML. While parsing, it builds a DOM tree, which is a tree-like representation of the web page (the ‘findElement()’ method searches this DOM to find elements). The basic structure looks like this:

Document -> the root element (usually the HTML element) -> the elements (nodes) inside it (head, title, text, etc.)
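To make the structure above more concrete, here is a minimal sketch of a DOM-like tree and a lookup that searches it. The `Node` class, `walk` and `find_element_by_id` are hypothetical names invented for this illustration, not Selenium's or any browser's API. The point is that a lookup of this kind only asks whether a node exists in the tree, not whether anyone can see it.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Optional


@dataclass
class Node:
    """A hypothetical, heavily simplified DOM node."""
    tag: str
    attrs: dict = field(default_factory=dict)
    children: List["Node"] = field(default_factory=list)


def walk(node: Node) -> Iterator[Node]:
    """Yield the node and all of its descendants in document order."""
    yield node
    for child in node.children:
        yield from walk(child)


def find_element_by_id(root: Node, element_id: str) -> Optional[Node]:
    """Return the first node whose id matches, or None if there is none."""
    for node in walk(root):
        if node.attrs.get("id") == element_id:
            return node
    return None


# Document -> root element -> nested elements
document = Node("html", children=[
    Node("head", children=[Node("title")]),
    Node("body", children=[
        Node("div", {"id": "ticket", "style": "display: none"}, [
            Node("button", {"id": "submit"}),
        ]),
    ]),
])

# The button is found even though its parent is styled as hidden.
button = find_element_by_id(document, "submit")
print(button.tag)
```

In this toy model, finding an element and seeing an element are two separate questions, which is why a successful lookup says nothing about whether a click will work.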

However, at this stage, the browser does not yet know what needs to be rendered. It first gathers all the information it needs, for example stylesheets and scripts. After that, it parses this information and works out what needs to be rendered. To do this, it puts the data into a special format called the ‘display list’, which it has to generate (as described in the CSS specifications). It generates the list using tree traversals: it travels from top to bottom, applying some styles, and then from bottom to top again. According to the speaker, it can repeat this process up to five times.

After this introduction, the speaker went on to explain what browsers do not do when building display lists, even though it would make test automation a lot easier. During the top-to-bottom and bottom-to-top traversals, the browser creates the display list but never optimizes it. In other words, it never asks itself whether an element should be rendered or not. It leaves that to the CPU or the GPU, as they are a lot faster at processing the information. So instead of checking whether elements should be rendered, it tells the OS to overdraw. This means elements can be stacked on top of each other, and the user only sees the last drawn element.
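Overdrawing is easy to picture with a toy example. The sketch below is an assumption-laden simplification: the display list is reduced to labelled rectangles with non-negative coordinates, and `paint` is a hypothetical helper, not how a real browser or GPU works. Every rectangle is painted in order, and nothing checks whether an earlier one ends up covered.

```python
from typing import List, Tuple

# (label, x, y, width, height); the order of the list is the paint order.
# Coordinates are assumed to be non-negative in this sketch.
Rect = Tuple[str, int, int, int, int]


def paint(display_list: List[Rect], width: int, height: int) -> List[str]:
    """Paint every rectangle in order; later ones overwrite earlier ones."""
    canvas = [["." for _ in range(width)] for _ in range(height)]
    for label, x, y, w, h in display_list:
        for row in range(y, min(y + h, height)):
            for col in range(x, min(x + w, width)):
                canvas[row][col] = label
    return ["".join(row) for row in canvas]


# "A" is painted first, "B" second and partially on top of it.
display_list = [("A", 0, 0, 4, 2), ("B", 2, 1, 4, 2)]
for line in paint(display_list, width=6, height=3):
    print(line)
# prints:
# AAAA..
# AABBBB
# ..BBBB
```

Both rectangles were drawn in full, but part of "A" is simply gone from the final picture. No step in the painting loop recorded that fact, which is the information a visibility check would need.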

What does Selenium do?

Although the information that the speaker gave us was interesting, I was happy when he moved on to explain what Selenium does after a webpage is rendered and how its test automation works. He said Selenium is built by reverse engineering. The Selenium committers, companies and other contributors combine their knowledge of what browsers do when generating display lists with the answers that come up along the way, and try to reverse engineer the result.

For example, when the ‘Element.is_displayed()’ method is called, it sets a few steps in motion:

- Get a list of all elements between the element being tested for visibility and the document root along a branch of the tree

- Loop through the list; if an element is not visible, return false

- For each element, check whether it is displayed and whether it is visible

- Take into account elements that might have an opacity of 0, since those are not visible

The WebDriver specification describes it as follows:

(Source: https://w3c.github.io/webdriver/webdriver-spec.html#widl-WebElement-isDisplayed-boolean):

- visibility != hidden

- display != none (is also checked against every parent element)

- opacity != 0 (this is not checked for clicking an element)

- height and width are both > 0

- for an input, the attribute type != hidden

So it takes the element, retrieves all of its parents, loops through them, and asks the same question for each of these elements: is it visible? If one of them isn’t visible, the ‘is_displayed’ method will return false. All of these queries are done using JavaScript.
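The checks listed above can be sketched in a few lines. This is a simplified model and not Selenium's actual implementation (which, as mentioned, runs as JavaScript in the browser): the `Element` class and its fields are hypothetical stand-ins for an element and its computed style.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Element:
    """Hypothetical stand-in for an element and its computed style."""
    tag: str
    parent: Optional["Element"] = None
    display: str = "block"
    visibility: str = "visible"  # assumed to be the already-computed value
    opacity: float = 1.0
    width: int = 100
    height: int = 20
    input_type: Optional[str] = None


def ancestors_and_self(element: Element) -> List[Element]:
    """Collect the element and every parent up to the document root."""
    chain = []
    current: Optional[Element] = element
    while current is not None:
        chain.append(current)
        current = current.parent
    return chain


def is_displayed(element: Element) -> bool:
    """Apply the checks from the list above to this simplified model."""
    if element.tag == "input" and element.input_type == "hidden":
        return False
    if element.visibility == "hidden":
        return False
    if element.width <= 0 or element.height <= 0:
        return False
    # display and opacity are checked on the element and on every parent.
    for node in ancestors_and_self(element):
        if node.display == "none" or node.opacity == 0:
            return False
    return True


body = Element("body")
hidden_div = Element("div", parent=body, display="none")
button = Element("button", parent=hidden_div)

print(is_displayed(body))    # True
print(is_displayed(button))  # False, because a parent has display: none
```

Note what is missing from this model: nothing here looks at where the element ends up on screen or what is drawn on top of it.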

Reality

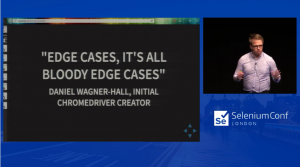

It sounds easy, but as my opening quote already showed, reality is quite different. The speaker confirmed this by mentioning that he and several (former) colleagues have had many sleepless nights because the visibility checks are not easy at all. He ‘blamed’ it on the CSS working group that didn’t stop working, the rise of mobile code, and JavaScript being bad at sharing code between browsers. This means there is an almost infinite number of edge cases.

He gave an example of one of these edge cases: a problem he encountered when working with Firefox OS (which apparently has a very large number of edge cases). Selenium told him a certain element was displayed, but nobody could actually see it. One of the developers had used a CSS transform to move the element way off the page. It was so far away that there was no way you could still click on it.
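A small sketch shows why this case slips through. The `Box` class and both functions below are hypothetical and only model a translate offset in pixels. A check that looks at size alone is satisfied, while a check that applies the offset and compares the result with the viewport is not.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical layout box with a CSS-like translate offset in pixels."""
    x: float
    y: float
    width: float
    height: float
    translate_x: float = 0.0
    translate_y: float = 0.0


def passes_size_check(box: Box) -> bool:
    """Size-only check, like 'height and width are both > 0'; ignores transforms."""
    return box.width > 0 and box.height > 0


def intersects_viewport(box: Box, viewport_width: float, viewport_height: float) -> bool:
    """Check whether the translated box overlaps the visible viewport."""
    left = box.x + box.translate_x
    top = box.y + box.translate_y
    right = left + box.width
    bottom = top + box.height
    return left < viewport_width and right > 0 and top < viewport_height and bottom > 0


# A button moved 5000 pixels to the left of where it was laid out.
moved_button = Box(x=10, y=10, width=80, height=30, translate_x=-5000)

print(passes_size_check(moved_button))              # True
print(intersects_viewport(moved_button, 1280, 720)) # False
```

The viewport size of 1280 by 720 is an arbitrary assumption for the example. Real transforms also include rotation and scaling, which makes a general check considerably harder than this translate-only version.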

I encountered another edge case myself while working with popups in an application we work with at Caplin Systems. There were two popup tickets, one in the center of the page and the other partially overlapping it (on top of it). Is the first ticket visible? Selenium says yes. From a coding point of view it is impossible to tell whether elements are stacked on top of each other, as the display list doesn’t always give this information. The same goes for the browsers. As explained before, they are designed to be as fast as possible and don’t care about details like visibility. ChromeDriver seems to be a bit smarter, as it warned me that the first ticket was visible but not clickable.
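The two-ticket situation can be modelled as a simple hit test. This is a sketch under assumptions: the `Popup` class, the coordinates and the idea that a click is aimed at the centre of the target element are all illustrative, and the code is not ChromeDriver's implementation. It shows how an element can pass every style check and still lose the click to whatever was painted on top of it.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Popup:
    """Hypothetical popup ticket described by its on-screen rectangle."""
    name: str
    x: int
    y: int
    width: int
    height: int

    def center(self) -> Tuple[int, int]:
        return (self.x + self.width // 2, self.y + self.height // 2)

    def contains(self, px: int, py: int) -> bool:
        return (self.x <= px < self.x + self.width
                and self.y <= py < self.y + self.height)


def element_at_point(paint_order: List[Popup], px: int, py: int) -> Optional[Popup]:
    """Return the last-painted popup covering the point, i.e. the topmost one."""
    for popup in reversed(paint_order):
        if popup.contains(px, py):
            return popup
    return None


def click_target(paint_order: List[Popup], target: Popup) -> Popup:
    """Return the popup that would receive a click aimed at the target's centre."""
    px, py = target.center()
    hit = element_at_point(paint_order, px, py)
    return hit if hit is not None else target


first = Popup("first ticket", x=400, y=300, width=300, height=200)
second = Popup("second ticket", x=500, y=350, width=300, height=200)
paint_order = [first, second]  # the second ticket is drawn on top

receiver = click_target(paint_order, first)
if receiver is not first:
    print(f"{first.name} is not clickable at point {first.center()}. "
          f"Other element would receive the click: {receiver.name}")
```

In this model the first ticket is perfectly "displayed", yet its centre point is covered by the second ticket, which mirrors the error message quoted at the top of this post.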

The speaker ended his talk by mentioning that ‘is_displayed’ has limitations, but that you should still use it. It works as well as reasonably possible, and can improve even more if users like me raise issues and concerns with the Selenium developers.

Conclusions

Although it was an interesting talk, I was a bit disappointed he only described the problem I was already aware of. I was hoping for some solutions or tips as well, but there was unfortunately no more time for this. On the bright side, this forced me to think about it myself.

I think the first thing you need to realize is that, although the visibility errors are annoying, they make sense from a logical perspective. In my opinion, it is bad test logic to manipulate hidden elements, as users will not be able to do this. The fact that the ‘is_displayed’ method doesn’t always return the expected results doesn’t have to be a big issue, as long as you are aware of its limitations and look at your tests from the user’s point of view. Instead of manipulating hidden elements, you could try to write a valid test case that makes the element visible (for example by scrolling or by moving other elements aside first).

Another solution to the visibility issue, and in my experience the most important one, is using the right selectors. For me, most of the visibility issues were caused by not using an optimized selector. Ask yourself whether you are looking at the right element, at the right level, and whether you need to be a little more specific. This way you can at least avoid selecting the wrong element or a container that is (partially) covered by another element in the same location. To use the best selectors possible, it might be wise to talk to the developers as well. Using IDs for testing purposes doesn’t make the code that much less clean, but it would make test automation a lot easier. On top of that, it would also increase the test awareness of the developers. Thinking about how elements can and will be tested, and what kind of impact altering elements might have, will most definitely not hurt.

Even though the outcome of the talk was slightly disappointing (due to the lack of tips), thinking about how to deal with the issue increased my awareness of the topic and will hopefully lead to improved test automation code.