This article assumes that the reader is familiar with containers, Liberator, and how Liberator serves container subscriptions. Check this out for more information.

In brief, a container object holds a list of record names, which are treated as the elements of the container. It's typically used to hold a list of financial instruments that the client application displays in a grid. When a client subscribes to a container, Liberator sends the client the list of records in that container, followed by updates for each record. The larger the container, the longer it takes to receive all the updates, although the exact time depends on several variables. For a financial trading application, the latency in receiving those elements must be kept to a minimum.

As a project for Dev Week 2017 at Caplin, I worked on automating the container benchmark process, because we wanted to benchmark how well Liberator handles containers in different scenarios. I had previously created a benchmark application, which needed some additional touch-ups and configuration.

The agenda for Dev Week was:

- Configure the tests to run in CI and create a CI pipeline for it

- Add a feature to persist the recorded data in a database

- Display graphs in a web interface using a suitable tool

- Add a feature to compare data with previous benchmark data

In this blog post, I explain the scenarios that had to be considered for the container benchmark, what was achieved in Dev Week, and what still needs to be done.

Benchmark Scenarios

The time taken to receive all the records of a subscribed container depends on several factors, such as the number of records in the container, the size of each record, and settings like window size and yield size.

The window size specifies how many records are sent back to the client when the container holds more records than the window size. To learn more about windowing, check here. Setting the container-yield-size configuration option in Liberator makes it serve a container in batches of rows, which prevents a large container from delaying other operations in the session thread. More information about container-yield-size is available here.

These settings clearly affect the time it takes to serve the container to its subscribers, so they need to be considered when performing the benchmark. For the benchmark, I measured separately the time it takes to receive only the list of records in the container and the overall time, which also includes the record updates. There are therefore several scenarios to consider when benchmarking containers. All the assumed scenarios are listed below and are self-explanatory.

| # | Container | Records | Window | Record updates |
|---|-----------|---------|--------|----------------|
| 1 | Small | Small | With Window | Ignore |
| 2 | Small | Small | With Window | Include |
| 3 | Small | Small | No Window | Ignore |
| 4 | Small | Small | No Window | Include |
| 5 | Small | Large | With Window | Ignore |
| 6 | Small | Large | With Window | Include |
| 7 | Small | Large | No Window | Ignore |
| 8 | Small | Large | No Window | Include |
| 9 | Large | Small | With Window | Ignore |
| 10 | Large | Small | With Window | Include |
| 11 | Large | Small | No Window | Ignore |
| 12 | Large | Small | No Window | Include |
| 13 | Large | Large | With Window | Ignore |
| 14 | Large | Large | With Window | Include |
| 15 | Large | Large | No Window | Ignore |
| 16 | Large | Large | No Window | Include |
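The sixteen scenarios are simply every combination of four two-valued settings, so they can be generated rather than written out by hand. The following is a minimal Python sketch of that idea, not the code used in the benchmark. Only the name of scenario 2 (`SMALL_CONT_SMALL_ROWS_WITH_WINDOW_INCLUDE_RECORD_UPDATES`) appears in this post; the other name tokens and the `scenario_names` helper are assumptions made for illustration.

```python
from itertools import product

# Each tuple holds the two values of one setting, in the order used by the
# scenario table. Apart from the tokens that make up scenario 2, these
# names are assumed for illustration.
DIMENSIONS = [
    ("SMALL_CONT", "LARGE_CONT"),
    ("SMALL_ROWS", "LARGE_ROWS"),
    ("WITH_WINDOW", "NO_WINDOW"),
    ("IGNORE_RECORD_UPDATES", "INCLUDE_RECORD_UPDATES"),
]


def scenario_names():
    """Return all 16 scenario names, ordered like the scenario table."""
    # product() varies the last setting fastest, which matches the table.
    return ["_".join(combo) for combo in product(*DIMENSIONS)]
```

Calling `scenario_names()[1]` gives the name of scenario 2, because the list is zero-indexed while the table starts at 1.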

Achievements

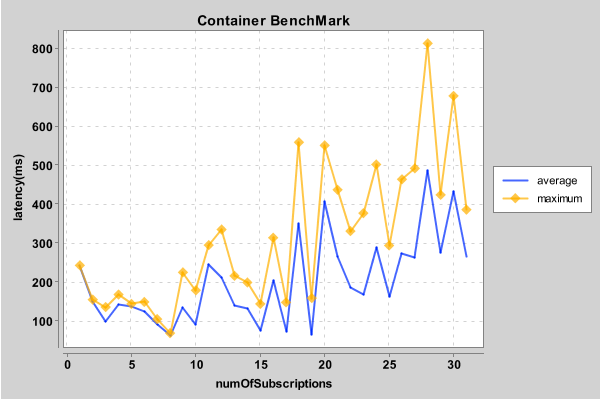

The test consists of a properties file for each scenario, which can be edited to set several params such as maximum latency, maximum number of user subscriptions, window size, container size, number of rows in container and so on. In CI, benchmark tests for above set of scenarios are run with container-yield-size set (which is by default), and without container-yield-size is set. Two separate CI pipelines are created for this purpose. The benchmark test was configured so that a single gradle command would set up all necessary environment and all scenarios are run sequentially. The data recorded would then be persisted in a MySQL database. This test is run in CI pipeline and is triggered each time a 7.0 liberator kit is generated. With each build, separate graphs are generated for each scenario. An example of graph is displayed below for a specific scenario.

Fig. Container benchmark graph for scenario: SMALL_CONT_SMALL_ROWS_WITH_WINDOW_INCLUDE_RECORD_UPDATES
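To make the persistence step more concrete, here is a rough Python sketch of storing one result row per build, scenario, and yield-size setting, and reading back the history that a graph would plot. It uses the standard-library `sqlite3` module as a stand-in for MySQL so that it runs without a database server. The table name, column names, and both helper functions are hypothetical and do not reflect the actual benchmark schema.

```python
import sqlite3

# Hypothetical schema: one row per (build, scenario, yield-size setting).
# list_time_ms is the time to receive only the list of records;
# total_time_ms also includes the record updates.
SCHEMA = """
CREATE TABLE IF NOT EXISTS benchmark_result (
    build TEXT NOT NULL,
    scenario TEXT NOT NULL,
    yield_size_set INTEGER NOT NULL,
    list_time_ms REAL NOT NULL,
    total_time_ms REAL NOT NULL,
    PRIMARY KEY (build, scenario, yield_size_set)
)
"""


def save_result(conn, build, scenario, yield_size_set, list_time_ms, total_time_ms):
    """Insert one benchmark result, replacing any earlier row with the same key."""
    conn.execute(SCHEMA)
    conn.execute(
        "INSERT OR REPLACE INTO benchmark_result VALUES (?, ?, ?, ?, ?)",
        (build, scenario, int(yield_size_set), list_time_ms, total_time_ms),
    )
    conn.commit()


def history(conn, scenario, yield_size_set):
    """Return (build, total_time_ms) pairs for one scenario, oldest first."""
    rows = conn.execute(
        "SELECT build, total_time_ms FROM benchmark_result "
        "WHERE scenario = ? AND yield_size_set = ? ORDER BY rowid",
        (scenario, int(yield_size_set)),
    )
    return rows.fetchall()
```

With a connection from `sqlite3.connect(":memory:")`, calling `save_result` once per scenario after each run and `history` when drawing a graph would give one series per scenario, separately for runs with and without container-yield-size set.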

ToDos

Although most of the goals were achieved during Dev Week, the project could not be completed. The plan was also to use the data collected by this benchmark to display real-time graphs in a web UI using appropriate web tools, but that could not be achieved because of time constraints. The next step is therefore to generate graphs in a web interface for the different scenarios, updated with new data each time the CI build for the benchmark runs. In addition to generating real-time graphs, the results need to be compared with previous results to ensure that there is no performance regression. Another feature could be added to the benchmark tests to compare the results and take some action, such as sending an email or failing the build, if there is a performance regression.
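One possible shape for the regression check is sketched below in Python. It is only an illustration of the idea and is not implemented in the benchmark: the `has_regressed` function, the use of the median of earlier runs as the baseline, and the 10% tolerance are all assumptions that would need tuning against real data.

```python
from statistics import median


def has_regressed(previous_ms, current_ms, tolerance=0.10):
    """Return True if current_ms is slower than the baseline by more than tolerance.

    previous_ms: timings (in milliseconds) from earlier builds of one scenario.
    current_ms:  timing from the build under test.
    tolerance:   allowed slowdown as a fraction of the baseline (assumed 10%).
    """
    if not previous_ms:
        # No earlier data, so there is nothing to compare against.
        return False
    # The median is less sensitive to a single noisy run than the mean.
    baseline = median(previous_ms)
    return current_ms > baseline * (1 + tolerance)
```

A CI step could call this once per scenario and exit with a non-zero status, or send an email, whenever it returns `True`.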