QAFFL has nothing to do with Harry Potter, despite the name and possible Quidditch connection. In fact, it is a one-day conference focusing on QA in Finance: a series of talks and panels covering QA practices and experiences, with established banks, challenger banks, regulators and the audience all providing their own viewpoints.

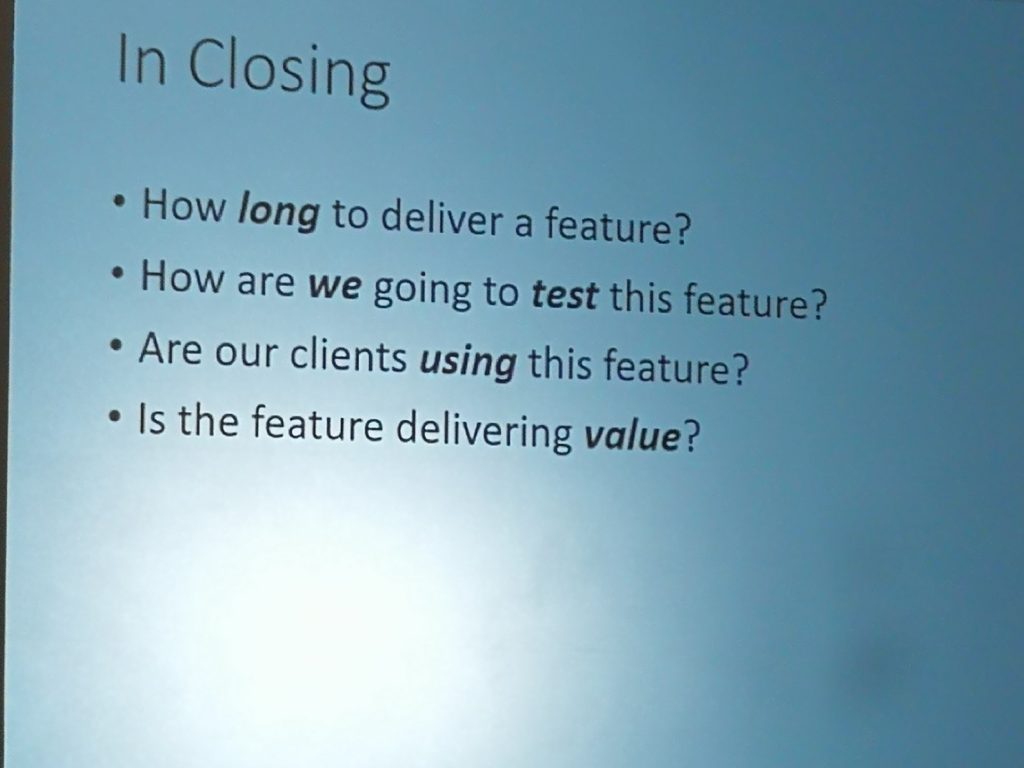

The standout talks of the day for me were those from Matt Davey from Goldman Sachs, Allan Woodcock from Lloyds, and the excellent closing talk from Hassan Bugti from Saxo, who explained how QA cannot be the monster behind the door, but needs to get involved in every meeting and be a fundamental part of the software development process from the beginning. Hassan also left us with a reading list to refer back to, including ‘The Checklist Manifesto’ and ‘The Phoenix Project’, both of which are already popular at Caplin. Hassan, you are on our wavelength, and we can’t wait for the English translation of the final book you recommended: ‘Jytte fra Marketing er desværre gået for i dag’ or ‘Jytte from Marketing is unfortunately out for the day’, currently only available in Danish.

Matt Davey’s talk was a smorgasbord of techniques and tools that have worked for him in different roles, in particular Objectives and Key Results. He also re-emphasised the value of Agile staples such as Definitions of Done and Acceptance Criteria, and of ensuring that you know where they are and that all stories have them. He also stressed the importance of Realised Business Value, especially at the Sprint and Story level. Can you measure and confirm what business value there is in the current sprint? Can you point to the realised business value for each story in that sprint?

Matt also continued the ongoing theme of the conference: SHIFT LEFT. It was mentioned in several of the talks, and means pushing quality to earlier in the cycle. For example, ensuring that you have development-ready stories, and that you don’t just have tests, run tests, and know your tests’ coverage, but that you also store your test results in a Test Results Database: one that you can then search for patterns, and mine for data to help ensure quality in the future based on your experiences in the past.
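To make the Test Results Database idea a little more concrete, here is a minimal sketch of what mining stored results might look like. It was not shown in the talk: the `test_results` table, its columns, the sample rows and the two helper functions are all assumptions made up for illustration, using Python's built-in `sqlite3` module with an in-memory database.

```python
import sqlite3

# Hypothetical schema: one row per test execution. None of these
# names come from the talk; they are assumptions for this sketch.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE test_results (
        run_id      INTEGER,
        test_name   TEXT,
        status      TEXT,     -- 'pass' or 'fail'
        duration_ms INTEGER
    )
    """
)

# Made-up sample data standing in for results collected by a CI pipeline.
rows = [
    (1, "test_login", "pass", 120),
    (1, "test_trade_ticket", "fail", 900),
    (2, "test_login", "pass", 115),
    (2, "test_trade_ticket", "pass", 870),
    (3, "test_login", "pass", 130),
    (3, "test_trade_ticket", "fail", 910),
    (3, "test_pricing", "pass", 300),
]
conn.executemany("INSERT INTO test_results VALUES (?, ?, ?, ?)", rows)


def failure_rates(conn):
    """Return (test_name, runs, failures, failure_rate), worst first."""
    return conn.execute(
        """
        SELECT test_name,
               COUNT(*) AS runs,
               SUM(status = 'fail') AS failures,
               ROUND(1.0 * SUM(status = 'fail') / COUNT(*), 2) AS failure_rate
        FROM test_results
        GROUP BY test_name
        ORDER BY failure_rate DESC, test_name
        """
    ).fetchall()


def flaky_tests(conn):
    """Return names of tests that have both passed and failed across runs."""
    cursor = conn.execute(
        """
        SELECT test_name
        FROM test_results
        GROUP BY test_name
        HAVING COUNT(DISTINCT status) > 1
        ORDER BY test_name
        """
    )
    return [name for (name,) in cursor]
```

Calling `failure_rates(conn)` ranks tests by how often they fail, and `flaky_tests(conn)` picks out tests whose outcome changes between runs. A real setup would presumably record much more (commit, environment, error message), but even this much is enough to start looking for patterns.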

The practices Matt advocated included Chaos Engineering, Incident Management metrics, Site Reliability Engineering and Team Health. He recommended the Spotify Team Health scoring system, and running it quarterly or yearly to see how the team health scores match up with the Objectives and Key Results metrics.

Allan Woodcock from Lloyds had already been part of a panel discussion earlier in the day. During that panel one of the other participants had highlighted that the two biggest issues for QA Departments in ensuring quality in Financial applications were Data and Environments.

Data is an issue because there are strict rules around using customer data from both privacy and regulatory points of view. So obtaining representative data to test with is always a challenge, as is ensuring that those data sets remain consistent across testing runs.
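One common way to keep masked data consistent across test runs is to replace sensitive values with a keyed hash, so the same input always maps to the same token. The sketch below is purely illustrative and was not presented at the conference: the key, the field names and both helper functions are hypothetical, and whether this kind of pseudonymisation satisfies a given privacy or regulatory rule is a separate question for compliance.

```python
import hashlib
import hmac

# Hypothetical key for illustration only; a real key would be kept
# out of source control and restricted to the test environment.
SECRET_KEY = b"test-environment-only-key"


def pseudonymise(value: str, key: bytes = SECRET_KEY, length: int = 12) -> str:
    """Map a sensitive value to a stable token using HMAC-SHA256.

    The same value and key always produce the same token, so masked
    data sets stay consistent from one test run to the next.
    """
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:length]


def mask_record(record: dict, sensitive_fields: set) -> dict:
    """Return a copy of the record with the sensitive fields pseudonymised."""
    return {
        field: pseudonymise(str(value)) if field in sensitive_fields else value
        for field, value in record.items()
    }


# Made-up example record; the field names are assumptions.
customer = {"name": "Jane Example", "account": "12345678", "balance": 1050}
masked = mask_record(customer, {"name", "account"})
```

Because the mapping is deterministic, relationships between records (the same account appearing in two tables, say) survive the masking, which is part of what makes a data set representative.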

Creating environments that are representative of production is just as much of a challenge, especially in the age of cloud-based hosting.

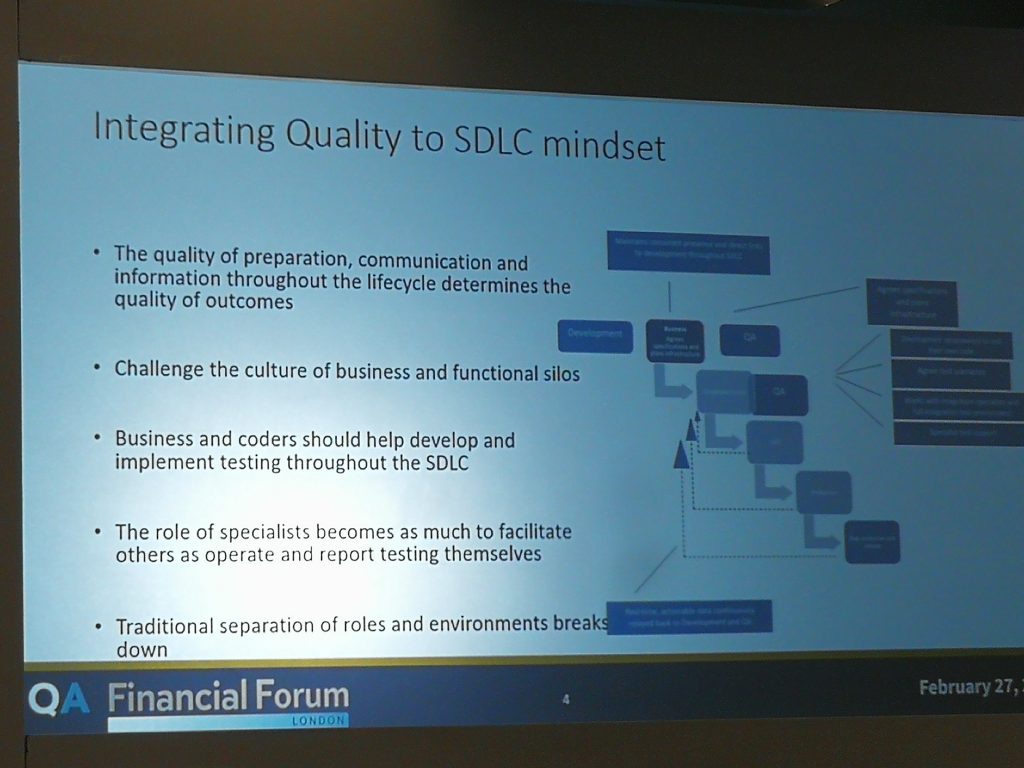

Allan’s main talk concerned the continuous challenge of innovating in terms of the SDLC, the tools used, and the position of the QA function in relation to them. In particular, Allan highlighted the sheer number of different tools used by the numerous QA teams that work within the Lloyds organisation, an organisation that employs 19,000 people.

Nonetheless, Allan was keen to stress that they do innovate, and that there are ways in which an organisation of that size can safely do so. It is something that large organisations need to prioritise, as sharing discoveries horizontally across the organisation is far more effective than multiple teams each discovering the same tools and techniques for themselves. This was reinforced for us when we were offered a better version of the image above by the Lloyds QA tools research team, who were sitting behind us and watched me take that photo. We had a good chat with them after Allan’s talk, and we will now be investigating Headspin for mobile testing.

Overall it was a great conference for us to attend, and many thanks to Matthew Crabbe and team for putting it all together. Hopefully we’ll see everyone again next year.