As the financial comet-streaming engine space becomes more commoditised, vendor selection processes for market data distribution and trade capture projects are becoming both more commonplace and more exacting in their functional and non-functional requirements.

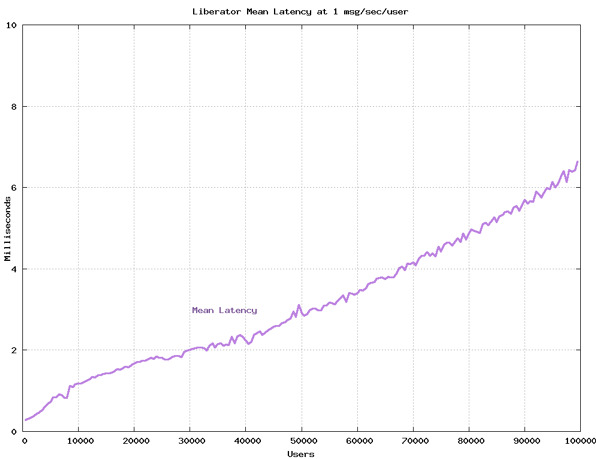

One typical non-functional requirement is high comet engine scalability: the ability to disseminate messages to a large number of concurrent client sessions. This is for good reason; scalability determines not only the number of server boxes and data-centers that must be purchased but also the latency of the client connection. Latency subjects banks to market risk and increases the likelihood of trade rejection, damaging the precious bank-client relationship.

Caplin Benchmarking Tools

As Caplin Liberator has been designed from the ground up to be the most scalable distribution engine, Caplin has been promoting Liberator’s scalability for a number of years. In order to help banks run their own benchmark use cases, Caplin developed benchmarking tools to facilitate exactly these sorts of tests.

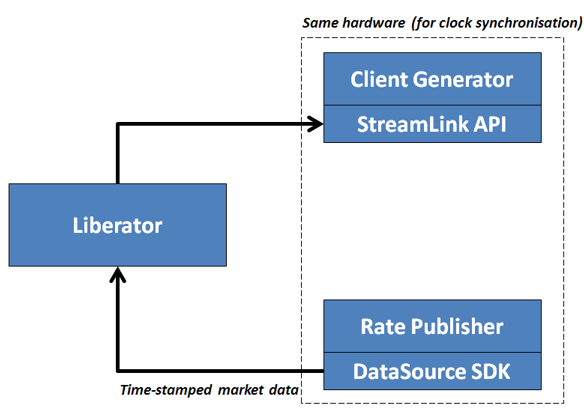

The Caplin benchmarking tools comprise a rate publisher which generates market data and a client generator which generates client sessions. The rate publisher generates market data updates for a configurable number of instruments at a given message update frequency and size. The client generator generates a configurable number of client sessions, which subscribe to a given number of instruments.
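Before running a test it can help to estimate the load these parameters imply. The following is a back-of-envelope sketch only: it assumes every update of a subscribed instrument is delivered to every subscriber (no conflation or throttling) and ignores protocol overhead. The class name and all figures are illustrative, not Caplin results.

```java
/** Illustrative load estimate; not part of any Caplin tool. */
final class LoadEstimate {

    /** Messages per second leaving the server, assuming every update reaches every subscriber. */
    static long outboundMessagesPerSecond(long clients, long instrumentsPerClient,
                                          long updatesPerSecondPerInstrument) {
        return clients * instrumentsPerClient * updatesPerSecondPerInstrument;
    }

    /** Payload bandwidth in megabits per second, ignoring protocol overhead. */
    static double outboundMegabitsPerSecond(long messagesPerSecond, long bytesPerMessage) {
        return messagesPerSecond * bytesPerMessage * 8 / 1_000_000.0;
    }

    public static void main(String[] args) {
        // Hypothetical scenario: 100,000 clients, 10 instruments each, 1 update/s, 100-byte messages.
        long messages = outboundMessagesPerSecond(100_000, 10, 1);
        System.out.println(messages + " msg/s, "
                + outboundMegabitsPerSecond(messages, 100) + " Mbit/s");
    }
}
```

An estimate like this gives a rough idea of whether the network link, rather than the server, is likely to be the first bottleneck.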

Benchmarking usually assesses metrics such as the end-to-end latency (publisher to client), the round-trip latency (client to publisher to client), and server resource usage such as per-core CPU and memory.

Caplin regularly publishes Liberator scalability results to keep pace with software revisions and modern hardware specs. Martin Tyler recently blogged about Benchmarking Liberator to 100,000 users and also discussed Real World Benchmarking Scenarios. You can see the latest results here.

An example benchmark use case: distribution to 100,000 client sessions with an average latency below 10ms.

Benchmarking without Benchmarking Tools

Caplin strongly advocates the use of its benchmarking tools, as implementing a scalable rate publisher or client generator is a difficult technical challenge. Caplin’s client generator can generate many thousands of client sessions without impairing latency measurement.

However, a few institutions closely involved with comet engines have recently raised doubts over the reproducibility of some vendors’ published results. In response, some vendor selection processes have prohibited the use of vendor provided tools in scalability assessment.

To help our clients benchmark Caplin Liberator without the Caplin benchmarking tools, here are some guidelines for creating a simple benchmarking framework.

Benchmarking Architecture

Example benchmarking architecture

Building a Benchmarking Rate Publisher

On initialisation, the rate publisher should create and start a DataSource instance. The DataSource will connect to Liberator, providing connectivity for rate publication.

To instantiate a DataSource in Java, pass the DataSource and Fields XML configuration files to the DataSource constructor.

    import java.io.File;
    import java.io.IOException;
    import org.xml.sax.SAXException;
    // DataSource SDK imports omitted here; see the SDK documentation for package names.

    public class Publisher
    {
        DataSource datasource;

        static final String DATASOURCEXMLPATH = "conf/DataSource.xml";
        static final String FIELDSXMLPATH = "conf/Fields.xml";

        public Publisher() throws IOException, SAXException
        {
            /* Initialise the DataSource from XML config */
            System.out.println("Starting DataSource...");
            File dataSourceXmlFile = new File(DATASOURCEXMLPATH);
            File fieldsXmlFile = new File(FIELDSXMLPATH);

            /* Connect to Liberator and start the DataSource */
            this.datasource = new DataSource(dataSourceXmlFile, fieldsXmlFile);
            this.datasource.start();
        }
    }

Documentation and examples of the DataSource and Fields configuration files are provided within the DataSource SDK. You should configure the DataSource to connect to the Liberator instance by hostname or IP address; Liberator should be the only running process on that box.

In order to generate an instrument update and send it to Liberator for distribution, the update must be assigned a subject, such as “/EQUITIES/MSFT.O” and a set of field-value pairs:

    public void publish( String subject, String bid, String ask )
    {
        DSRecord record = DSFactory.createDSRecord(this.datasource, subject);
        record.addRecordData("TimeStamp", System.nanoTime());
        record.addRecordData("Bid", bid);
        record.addRecordData("Ask", ask);
        record.send();
    }

The rate publisher framework should invoke the publish method for each instrument required, at the required update frequency and with the desired payload. Note that a timestamp is appended to each message at source, allowing the end-to-end latency to be measured by the client generator.
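One way to drive the publish method at a fixed rate is a scheduled executor. The sketch below is an assumption about how such a framework might look, not Caplin code: `TickSink` is a hypothetical stand-in for the `publish(subject, bid, ask)` method above, and the padded prices are an arbitrary way of controlling payload size.

```java
import java.util.List;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;

/** Hypothetical stand-in for Publisher.publish(subject, bid, ask). */
interface TickSink {
    void publish(String subject, String bid, String ask);
}

/** Illustrative pacing loop: one update per subject per period. */
final class PacedPublisher {
    private final ScheduledExecutorService scheduler =
            Executors.newSingleThreadScheduledExecutor();
    private final AtomicLong sequence = new AtomicLong();

    /** Right-pads a value with zeros so the field reaches the desired length. */
    static String pad(String value, int length) {
        StringBuilder sb = new StringBuilder(value);
        while (sb.length() < length) {
            sb.append('0');
        }
        return sb.toString();
    }

    /** Sends a single update for every subject, using a changing synthetic price. */
    void publishOnce(List<String> subjects, int fieldLength, TickSink sink) {
        long n = sequence.incrementAndGet();
        String bid = pad("100." + (n % 100), fieldLength);
        String ask = pad("101." + (n % 100), fieldLength);
        for (String subject : subjects) {
            sink.publish(subject, bid, ask);
        }
    }

    /** Repeats publishOnce at a fixed rate until stop() is called. */
    void start(List<String> subjects, long periodMicros, int fieldLength, TickSink sink) {
        scheduler.scheduleAtFixedRate(
                () -> publishOnce(subjects, fieldLength, sink),
                0, periodMicros, TimeUnit.MICROSECONDS);
    }

    void stop() {
        scheduler.shutdownNow();
    }
}
```

With the Publisher class above, a call such as `paced.start(subjects, 1_000_000, 8, publisher::publish)` would request one update per instrument per second. A single scheduler thread is the simplest option; at high instrument counts or rates it may fall behind, in which case the subjects could be partitioned across several threads.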

Building a Benchmarking Client Generator

In order to generate client sessions you will need to use the client-side StreamLink API. Each client is a separate StreamLink instance, and subscribes to a set of instruments by subject.

To create a StreamLink client instance in Java, pass a properties file to an RTJLProvider (here, a ReconnectingRTJLProvider):

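    import java.io.FileInputStream;
    import java.io.FileNotFoundException;
    import java.io.IOException;
    import java.util.Hashtable;
    import java.util.Properties;
    // StreamLink for Java imports omitted here; see the API documentation for package names.

    public class Subscriber extends RTTPSubscriberAdapter
    {
        private static final String PROPERTIES_FILE = "conf/rtjl.props";

        private RTTPBatchReadWrite readWrite;

        public Subscriber() throws FileNotFoundException, IOException, RTJLException
        {
            /* Initialise the client from a properties file, closing the stream afterwards */
            Properties props = new Properties();
            try (FileInputStream in = new FileInputStream(PROPERTIES_FILE))
            {
                props.load(in);
            }

            /* Register this instance for callbacks and keep the request interface */
            ReconnectingRTJLProvider provider = new ReconnectingRTJLProvider(props);
            provider.setRTTPSubscriber(this);
            readWrite = provider.getRTTPInterfaces().getRTTPBatchReadWrite();
        }
    }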

Documentation and examples of Java properties files are included within the StreamLink for Java API. The StreamLink client should be configured to connect to the HTTP/HTTPS port of your Liberator instance.

For each client session required, the client generator framework should generate a Subscriber instance, and each such instance should subscribe to a watchlist of instruments.

In order to subscribe a client to a list of subjects:

    public void subscribe( String[] subjects ) throws RTJLException
    {
        RTTPTarget[] target = new RTTPTarget[subjects.length];
        for (int i = 0; i < subjects.length; i++)
        {
            target[i] = RTTPTargetCreator.get(subjects[i]);
        }
        readWrite.request(target);
    }
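The client generator also has to decide which instruments each session watches. A minimal sketch, assuming a round-robin assignment over a fixed instrument universe; the `WatchlistPlanner` class and the `/EQUITIES/SYM<n>` subject naming are hypothetical and would need to match whatever subjects your rate publisher actually emits.

```java
/** Illustrative watchlist assignment; not part of the StreamLink API. */
final class WatchlistPlanner {

    /** Builds a universe of synthetic subjects: /EQUITIES/SYM0, /EQUITIES/SYM1, ... */
    static String[] instruments(int count) {
        String[] subjects = new String[count];
        for (int i = 0; i < count; i++) {
            subjects[i] = "/EQUITIES/SYM" + i;
        }
        return subjects;
    }

    /**
     * Returns the watchlist for one client: a run of consecutive instruments,
     * offset by the client index and wrapping around the universe, so that
     * subscriptions are spread evenly across instruments.
     */
    static String[] watchlistFor(int clientIndex, int watchlistSize, String[] universe) {
        if (watchlistSize > universe.length) {
            throw new IllegalArgumentException("watchlist larger than instrument universe");
        }
        String[] watchlist = new String[watchlistSize];
        int start = (int) (((long) clientIndex * watchlistSize) % universe.length);
        for (int i = 0; i < watchlistSize; i++) {
            watchlist[i] = universe[(start + i) % universe.length];
        }
        return watchlist;
    }
}
```

Each generated session would then call something like `subscriber.subscribe(WatchlistPlanner.watchlistFor(i, 10, universe))`. Real client populations tend to concentrate on a few popular instruments, so an even spread is only one of several distributions worth testing.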

Clients will receive publications for each subscribed instrument via a callback method ‘recordUpdated’. This method provides updates as field-value pairs:

    // called on tick arrival (extends RTTPSubscriberAdapter)
    public void recordUpdated( String subject, Hashtable nameValuePairs, boolean cached )
    {
        String timestamp = (String) nameValuePairs.get("TimeStamp");
        if (timestamp == null)
        {
            return; // this update carried no TimeStamp field, so latency cannot be computed
        }

        // latency = time of receipt minus the time stamped by the publisher
        long timeSent = Long.parseLong(timestamp);
        long timeNow = System.nanoTime();
        long timeDelta = timeNow - timeSent;
        System.out.println("Latency:" + timeDelta + " nanos");
    }

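Every client instance measures the latency of each message by comparing the message timestamp with the present time. To prevent clock differences from affecting results, the publisher should be situated on the same box as the client generator. Note that Java only guarantees System.nanoTime() values to be comparable within a single JVM; if the publisher and client generator run as separate processes, check that the values share an origin on your platform, or timestamp with System.currentTimeMillis() instead, at the cost of millisecond resolution.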

Latency values can be pushed into a database or statistics package for analysis, for example the minimum, maximum and average message latency, the standard deviation, and any outliers.
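If a full statistics package is more than you need, the basic figures can be computed in a few lines. The following is a minimal sketch, assuming latencies have been collected into an array of nanosecond values; `LatencyStats` is a hypothetical helper, and the percentile uses the simple nearest-rank definition.

```java
import java.util.Arrays;

/** Illustrative summary statistics over recorded latencies (nanoseconds). */
final class LatencyStats {
    private final long[] sorted;
    final double mean;
    final double stddev;

    LatencyStats(long[] samplesNanos) {
        if (samplesNanos.length == 0) {
            throw new IllegalArgumentException("no samples");
        }
        sorted = samplesNanos.clone();
        Arrays.sort(sorted);

        double sum = 0;
        for (long s : sorted) {
            sum += s;
        }
        mean = sum / sorted.length;

        double squares = 0;
        for (long s : sorted) {
            squares += (s - mean) * (s - mean);
        }
        stddev = Math.sqrt(squares / sorted.length); // population standard deviation
    }

    long min() {
        return sorted[0];
    }

    long max() {
        return sorted[sorted.length - 1];
    }

    /** Nearest-rank percentile for p in (0, 100]; out-of-range ranks are clamped. */
    long percentile(double p) {
        int rank = (int) Math.ceil(p / 100.0 * sorted.length);
        rank = Math.min(Math.max(rank, 1), sorted.length);
        return sorted[rank - 1];
    }
}
```

High percentiles such as `percentile(99)` are often more informative than the average, since a small number of slow messages can hide behind a healthy mean.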

Please note that the simple benchmarking framework outlined above is far less optimised than the Caplin benchmarking tools. You may need a large number of client generator servers to generate sufficient load on Liberator.

Download the sample source code for this project here: benchmarking-src.